Everything on the EU AI Act in one Guide!

How to Make AI Agents GDPR-Compliant

Summary

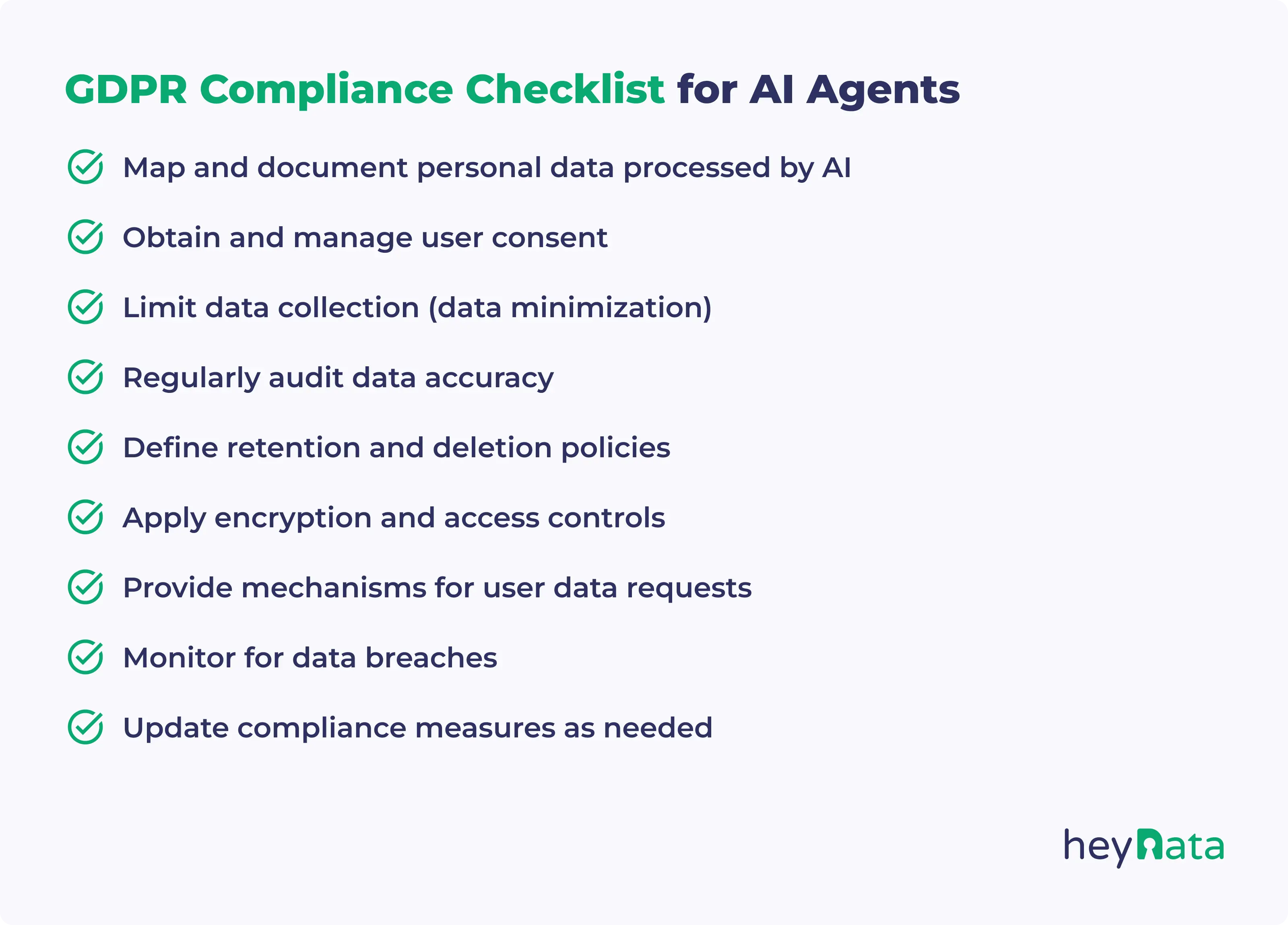

- AI agents must be GDPR-compliant by design and operation, especially when processing personal data or targeting users in the EU.

- Core data protection principles such as data minimization, transparency, purpose limitation, and accountability must be embedded from the start.

- User rights, such as access, rectification, erasure, and objection, must be technically supported and easy to exercise.

- Ongoing compliance and monitoring are essential, including regular audits, updates, and readiness for additional regulations like the EU AI Act.

AI agents are revolutionizing how modern businesses operate. From autonomous chatbots to intelligent data enrichment tools, AI is increasingly embedded in everyday SaaS processes. But when personal data is involved, automation comes with a great deal of responsibility. This is where AI Agent GDPR compliance becomes essential, ensuring that every automated interaction respects user privacy and adheres to legal obligations under the GDPR.

GDPR sets strict rules about how personal data can be collected, processed, and stored. When AI agents touch this data, whether to respond to users, segment leads, or personalize experiences, they must comply with GDPR obligations.

However, building a GDPR-compliant AI agent isn't a one-time task. It’s a process that spans design, deployment, and monitoring.

One of the most important principles to keep in mind from the outset is privacy by design, which GDPR Art. 25 calls "data protection by design and by default." It means building systems, like AI agents, with data protection built into their structure, not bolted on afterward. Compliance can’t be an afterthought. It has to be embedded in how the agent is planned, designed, and maintained.

In this guide, we will walk you through exactly what compliance means for AI agents, what legal and technical principles apply, and how to stay ahead in an increasingly regulated AI environment.

Do AI Agents Need to Be GDPR-Compliant?

Yes, if your AI agent processes personal data and you operate in or target users in the EU, it needs to be GDPR compliant.

An AI agent is a system that autonomously performs tasks based on inputs, logic, and training. Unlike static scripts, AI agents learn, adapt, and make decisions, often without explicit user intervention. In SaaS, these agents might handle tasks like:

- Answering customer service queries

- Enriching CRM data

- Processing consent forms

- Recommending content based on behavior

These agents frequently interact with personal data, such as names, emails, behavioral logs, or IP addresses, often to deliver personalized services or insights. And because the GDPR defines personal data broadly, even indirect handling of such data brings the agent under its regulatory scope.

This makes it essential for organizations to understand the compliance implications of deploying AI agents. The next sections break down the principles and safeguards needed to meet GDPR requirements effectively.

7 Core Principles of AI Agent GDPR Compliance

To ensure AI Agent GDPR compliance, you need to bake GDPR principles directly into how the agent operates.

1. Lawfulness, Fairness, Transparency

These principles ensure that AI agents handle personal data with a legitimate purpose, in a way that is clear, ethical, and understandable to users. This means users should not only know their data is being used but should also understand how and why.

How to ensure compliance:

- Identify and document a valid legal basis for every type of personal data processing. This could include user consent, fulfillment of a contract, or a legitimate business interest.

- Make sure your privacy policy clearly explains when AI agents are involved in data processing, what data they use, and for what purpose.

- Inform users when they are interacting with an AI system, especially when personal data influences the interaction. Use clear language such as "You're now chatting with our virtual assistant."

For example, if an AI onboarding assistant uses personal data such as employment history or contact details, it should display a short notice such as: "This assistant uses the data you provided to help guide you through the application process."
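The two compliance steps above, documenting a legal basis and disclosing the AI, can also be enforced in code. The following is a minimal sketch, assuming a simple in-memory register; the activity names, `require_legal_basis`, and `start_session` are hypothetical, and a real register would mirror your documented records of processing.

```python
from enum import Enum


class LegalBasis(Enum):
    """The six lawful bases listed in GDPR Art. 6(1)."""
    CONSENT = "consent"
    CONTRACT = "contract"
    LEGAL_OBLIGATION = "legal_obligation"
    VITAL_INTERESTS = "vital_interests"
    PUBLIC_TASK = "public_task"
    LEGITIMATE_INTERESTS = "legitimate_interests"


# Hypothetical register mapping each processing activity to its documented basis.
# "lead_scoring" is deliberately missing to show the failure path.
LEGAL_BASIS_REGISTER = {
    "support_chat": LegalBasis.CONTRACT,
    "onboarding_assistant": LegalBasis.CONTRACT,
    "newsletter_personalization": LegalBasis.CONSENT,
}

AI_DISCLOSURE = "You're now chatting with our virtual assistant."


class NoLegalBasisError(Exception):
    """Raised when an activity has no documented legal basis."""


def require_legal_basis(activity: str) -> LegalBasis:
    """Look up the documented basis and refuse to proceed if there is none."""
    try:
        return LEGAL_BASIS_REGISTER[activity]
    except KeyError:
        raise NoLegalBasisError(f"No documented legal basis for '{activity}'") from None


def start_session(activity: str) -> str:
    """Check the legal basis first, then open the session with the AI disclosure."""
    require_legal_basis(activity)
    return AI_DISCLOSURE


print(start_session("onboarding_assistant"))
# You're now chatting with our virtual assistant.
```

Activities that rely on consent would additionally need a per-user consent check before processing; the register only proves that a basis was documented.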

By implementing these steps, you can create a more transparent AI experience, foster user trust, and reduce the risk of regulatory scrutiny.

2. Purpose Limitation

This principle ensures that personal data is only used for the specific task it was collected for and not reused for unrelated or incompatible purposes. It's critical for building user trust and avoiding mission creep in AI functionality.

How to ensure compliance:

- Clearly define the purpose of the AI agent and its data uses in your internal documentation and privacy policy.

- Configure your AI system so that it cannot access or process data beyond what’s needed for its core function.

- Limit integrations with other systems unless the additional data usage has a valid legal basis and is communicated to users.

- Regularly audit your AI agent to ensure it hasn’t evolved to perform new functions that go beyond the original intent.

For example, if an AI agent is designed to handle customer support tickets, it should not extract product feedback data and send it to a marketing tool unless users have explicitly consented to such use.
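One way to make purpose limitation technical rather than procedural is to tag data with the purposes declared at collection and check every read against them. This is a sketch under that assumption; `DataItem`, `read_for_purpose`, and the purpose labels are hypothetical names for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DataItem:
    subject_id: str
    field: str
    value: str
    purposes: frozenset[str]  # purposes declared when the data was collected


class PurposeViolation(Exception):
    """Raised when data is requested for a purpose it was not collected for."""


def read_for_purpose(item: DataItem, purpose: str) -> str:
    """Return the value only if the requested purpose was declared at collection."""
    if purpose not in item.purposes:
        raise PurposeViolation(f"'{item.field}' was not collected for '{purpose}'")
    return item.value


ticket_text = DataItem(
    subject_id="u-123",
    field="ticket_text",
    value="The export button crashes the app.",
    purposes=frozenset({"customer_support"}),
)

read_for_purpose(ticket_text, "customer_support")   # allowed
# read_for_purpose(ticket_text, "product_feedback") would raise PurposeViolation

# After the user explicitly consents, the new purpose is added to the item.
with_consent = replace(ticket_text, purposes=ticket_text.purposes | {"product_feedback"})
```

Because the check runs at read time, a new integration that asks for data under an undeclared purpose fails loudly instead of silently widening the agent's scope.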

By focusing your AI agent on a well-defined purpose, you reduce regulatory risk and keep users in control of how their data is used.

3. Data Minimization

Only collect and process the data strictly necessary for the AI agent’s functionality. This principle limits exposure to compliance risk and ensures user data is respected.

How to ensure compliance:

- Configure the agent’s permissions to access only data essential for the specific task it performs.

- Disable or restrict fields that go beyond operational needs, especially those that include sensitive or behavioral data.

- Regularly audit which data fields are being accessed by the agent and remove any that are no longer needed.

- Work with developers to map data flow and highlight areas where minimization can be improved.

For example, a support bot resolving password resets should only request username or email, not address, phone number, or purchase history, unless directly required to verify identity.
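The password-reset example translates naturally into a per-task field allowlist applied before any data reaches the agent. A minimal sketch, assuming hypothetical task names and a dictionary-shaped customer record:

```python
# Hypothetical allowlist: the only fields each agent task may receive.
ALLOWED_FIELDS = {
    "password_reset": {"username", "email"},
    "order_status": {"email", "order_id"},
}


def minimize(task: str, record: dict) -> dict:
    """Strip every field the task does not strictly need.

    Unknown tasks receive no fields at all (deny by default).
    """
    allowed = ALLOWED_FIELDS.get(task, set())
    return {k: v for k, v in record.items() if k in allowed}


customer = {
    "username": "jdoe",
    "email": "jdoe@example.com",
    "address": "1 Main St",
    "phone": "+49 30 1234567",
    "purchase_history": ["sku-1", "sku-2"],
}

print(minimize("password_reset", customer))
# {'username': 'jdoe', 'email': 'jdoe@example.com'}
```

Denying by default means a newly added task gets nothing until someone consciously decides which fields it needs, which also makes the allowlist a useful artifact for the regular field audits described above.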

4. Accuracy

The agent must use accurate data and avoid generating or spreading misinformation. Inaccurate inputs or outputs can undermine trust, lead to poor decisions, and even violate users’ rights under GDPR.

How to ensure compliance:

- Validate data inputs before they enter the AI agent’s workflow. Use data integrity checks to catch outdated or clearly incorrect values.

- Build dynamic feedback loops so that when users or internal systems flag errors, corrections are integrated quickly and efficiently.

- Incorporate regular data refresh cycles. For example, set quarterly verification schedules for critical fields such as email, company name, or status.

- Establish escalation paths for decisions that appear to be based on outdated or clearly false information.

For example, a CRM enrichment agent should re-verify and update contact details, such as job title or company affiliation, every few months to avoid triggering incorrect lead scoring or outreach workflows.
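A refresh cycle like the one above can be backed by tracking when each field was last verified and excluding stale values from automated decisions. This sketch assumes a 90-day interval (roughly quarterly) and hypothetical helper names; the right interval depends on the field.

```python
from datetime import date, timedelta

# Assumed interval, roughly matching a quarterly verification schedule.
REVERIFY_AFTER = timedelta(days=90)


def stale_fields(last_verified: dict[str, date], today: date) -> list[str]:
    """Return the fields whose last verification is older than REVERIFY_AFTER."""
    return sorted(
        name
        for name, verified_on in last_verified.items()
        if today - verified_on > REVERIFY_AFTER
    )


def usable_for_scoring(record: dict[str, str], last_verified: dict[str, date],
                       today: date) -> dict[str, str]:
    """Drop stale fields so lead scoring never runs on outdated values."""
    stale = set(stale_fields(last_verified, today))
    return {k: v for k, v in record.items() if k not in stale}


contact = {"email": "ana@example.com", "job_title": "Analyst", "company": "Acme"}
verified = {
    "email": date(2024, 5, 1),
    "job_title": date(2023, 11, 15),
    "company": date(2024, 1, 10),
}

print(stale_fields(verified, today=date(2024, 6, 1)))  # ['company', 'job_title']
print(usable_for_scoring(contact, verified, today=date(2024, 6, 1)))
# {'email': 'ana@example.com'}
```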

5. Storage Limitation

Personal data processed or generated by AI agents should not be stored indefinitely. Storing data longer than necessary increases the risk of breaches and regulatory violations and erodes user trust.

How to ensure compliance:

- Define clear data retention timelines based on the purpose of processing. For instance, log data used for analytics might be kept for 30 days, while transactional records may need longer retention.

- Implement automated deletion scripts that align with your internal data retention policies and ensure regular purging of outdated logs and cache.

- Maintain a centralized retention policy document that includes how long different types of AI-processed data are stored.

- Communicate these retention periods to users via your privacy policy.

For example, if your chatbot logs conversations for service improvement, those logs should be deleted after 60 days unless users have opted into longer-term usage analysis.
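The automated deletion step could look roughly like the following sketch. It uses the 60-day period from the example; the 365-day period for opted-in logs is an assumption (opt-in extends retention, it does not make it indefinite), and `ChatLog` and `purge_expired` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# 60 days as in the example above; the opt-in period is an assumed value
# that should also be finite and documented in the retention policy.
DEFAULT_RETENTION = timedelta(days=60)
OPT_IN_RETENTION = timedelta(days=365)


@dataclass
class ChatLog:
    user_id: str
    created_at: datetime
    opted_into_analysis: bool = False


def purge_expired(logs: list[ChatLog], now: datetime) -> list[ChatLog]:
    """Return only the logs still inside their retention window.

    A scheduled job would delete everything not returned here.
    """
    kept = []
    for log in logs:
        limit = OPT_IN_RETENTION if log.opted_into_analysis else DEFAULT_RETENTION
        if now - log.created_at <= limit:
            kept.append(log)
    return kept


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
logs = [
    ChatLog("u1", now - timedelta(days=10)),
    ChatLog("u2", now - timedelta(days=90)),
    ChatLog("u3", now - timedelta(days=90), opted_into_analysis=True),
]
print([log.user_id for log in purge_expired(logs, now)])  # ['u1', 'u3']
```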

6. Integrity and Confidentiality

This principle ensures that personal data processed by AI agents is protected from unauthorized access, loss, or exposure. Because AI systems often operate at scale and speed, any lapse in security could lead to widespread breaches.

How to ensure compliance:

- Encrypt personal data in transit (e.g., TLS 1.2 or higher) and at rest (e.g., AES-256) to prevent interception or unauthorized access.

- Use identity and access management (IAM) systems to limit what data AI agents, and their underlying systems, can access based on roles or functions.

- Monitor agent activity for unusual behavior that might indicate a breach or misuse of data.

- Segment your data storage and processing environments to isolate sensitive information.

For example, prevent a product recommendation agent from querying payment data by setting granular access controls and validating API calls against pre-approved data scopes.
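The scope check from this example can be sketched as an application-level gate in front of every data query. The agent names, data domains, and functions are hypothetical, and such a gate is meant to sit alongside IAM policies, not replace them.

```python
# Hypothetical scope policy: which data domains each agent may query.
AGENT_SCOPES = {
    "product_recommender": {"catalog", "browsing_events"},
    "support_bot": {"tickets", "account_profile"},
}


class ScopeDenied(Exception):
    """Raised when an agent queries a domain outside its approved scope."""


def authorize_query(agent: str, domain: str) -> None:
    """Deny by default: raise unless the domain is in the agent's approved scope."""
    if domain not in AGENT_SCOPES.get(agent, set()):
        raise ScopeDenied(f"{agent} is not approved to query '{domain}'")


def run_query(agent: str, domain: str, query: str) -> str:
    authorize_query(agent, domain)
    # The real data-access call would go here; this sketch just echoes the query.
    return f"[{domain}] {query}"


print(run_query("product_recommender", "catalog", "top sellers"))
# [catalog] top sellers
# run_query("product_recommender", "payments", "card data") raises ScopeDenied
```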

A layered security approach is key: don't rely on any single control to protect personal data from exposure.

7. Accountability

Accountability is about demonstrating that your AI agent complies with GDPR, not just saying it does. You must be able to show regulators, users, or partners that appropriate processes, safeguards, and reviews are in place.

How to ensure compliance:

- Maintain detailed logs of all agent activity related to personal data, including what data was accessed, processed, or modified.

- Document the AI agent’s purpose, decision-making logic, inputs, outputs, and any machine learning models or datasets used.

- Keep all Data Protection Impact Assessments (DPIAs), risk assessments, and data flow diagrams up to date and easily accessible in case of an audit.

- Assign internal ownership for ongoing reviews and compliance updates related to the agent’s performance and behavior.

For example, if your AI agent generates contract drafts based on user input, maintain records of the data used, the drafting logic, and a change history in case a complaint or data access request is submitted.
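For the activity logs described above, one option is an append-only audit trail where each entry records who touched which data for what purpose. The hash chaining in this sketch is an added design choice, not a GDPR requirement: it makes later edits or deletions detectable. `AuditLog` and its fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only audit trail; each entry stores the hash of the previous one,
    so altering or removing an earlier entry breaks the chain."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, agent: str, subject_id: str, action: str,
               fields: list[str], purpose: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "subject_id": subject_id,
            "action": action,  # e.g. "read", "update", "generate"
            "fields": fields,
            "purpose": purpose,
            "prev_hash": prev_hash,
        }
        payload = json.dumps(entry, sort_keys=True).encode()
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that each entry links to its predecessor."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode()
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("contract_drafter", "u-42", "read", ["name", "company"], "contract_drafting")
log.record("contract_drafter", "u-42", "generate", ["draft_v1"], "contract_drafting")
print(log.verify())  # True
log.entries[0]["fields"].append("salary")  # simulate tampering with history
print(log.verify())  # False
```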

Embedding these principles proactively ensures you're not just GDPR-compliant on paper but also in practice, helping mitigate legal risks and build user trust through transparency and preparedness.

Respecting User Rights in AI Agent Design

GDPR grants individuals a set of rights over their personal data, such as the right to access, correct, delete, or object to its use. AI agents must be designed in ways that uphold these rights and provide mechanisms for users to exercise them.

1. Right of Access (Art. 15)

Users have the right to know what personal data is being collected, how it's being used, and by whom. Where the agent makes solely automated decisions covered by Art. 22, this also includes meaningful information about the logic involved and the decision's likely consequences for the user.

What to do:

- Maintain detailed and structured logs of the types of personal data the AI agent collects, processes, or generates.

- Create accessible data reports that explain what data is stored, for how long, and for what purpose, avoiding technical jargon when responding to user requests.

- Where applicable, include a simplified explanation of how the AI agent makes decisions using that data. For example, explain if the agent uses user behavior to determine which support resources to suggest.

- Ensure there is a clear and accessible process, such as a web form or dashboard, for users to submit access requests.

Being transparent about data processing fosters trust and ensures users can exercise their rights meaningfully.
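If the agent's activity is logged per data subject, a plain-language access report can be generated from those logs plus a data inventory. This is a sketch assuming a hypothetical inventory and log format; such a report would accompany, not replace, the copy of the data itself that Art. 15 also requires.

```python
from collections import defaultdict

# Hypothetical data inventory: why each field is processed and for how long.
DATA_INVENTORY = {
    "email": {"purpose": "account login and support replies",
              "retention": "until account deletion"},
    "chat_transcript": {"purpose": "answering support questions", "retention": "60 days"},
    "page_views": {"purpose": "suggesting relevant help articles", "retention": "30 days"},
}


def access_report(subject_id: str, access_log: list[dict]) -> dict:
    """Summarize which data the agents processed about one user, and why."""
    used = defaultdict(set)
    for entry in access_log:
        if entry["subject_id"] == subject_id:
            for name in entry["fields"]:
                used[name].add(entry["agent"])
    return {
        name: {
            # "not documented" exposes gaps in the inventory instead of hiding them
            "purpose": DATA_INVENTORY.get(name, {}).get("purpose", "not documented"),
            "retention": DATA_INVENTORY.get(name, {}).get("retention", "not documented"),
            "used_by": sorted(agents),
        }
        for name, agents in sorted(used.items())
    }


access_log_entries = [
    {"subject_id": "u-7", "agent": "support_bot", "fields": ["email", "chat_transcript"]},
    {"subject_id": "u-7", "agent": "help_recommender", "fields": ["page_views"]},
    {"subject_id": "u-8", "agent": "support_bot", "fields": ["email"]},
]
report = access_report("u-7", access_log_entries)
print(report["page_views"])
# {'purpose': 'suggesting relevant help articles', 'retention': '30 days', 'used_by': ['help_recommender']}
```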

2. Right to Rectification (Art. 16)

If the personal data used by the AI agent is inaccurate or incomplete, users have the right to request that it be corrected without undue delay. This right is essential to maintaining the accuracy and reliability of AI-driven interactions.

What to do:

- Design the agent’s data pipeline to allow for real-time updates or overwrites when corrections are received.

- Ensure that corrected data is automatically synchronized across all systems and logs that the AI agent relies on.

- Set up a process for flagging and prioritizing user-submitted correction requests, especially when inaccurate data impacts service delivery or decisions.

- Retrain or adapt AI models and logic if repeated issues with outdated data begin to skew outputs or predictions.

For example, if a user updates their job title in a profile, the AI assistant that tailors outreach or product suggestions should reflect that change in all future interactions.
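Synchronizing a correction across every system the agent relies on can be sketched as pushing one update to a registry of stores. `Store` and `rectify` are hypothetical stand-ins; in practice each store would wrap a CRM, profile cache, or search index.

```python
class Store:
    """Stand-in for any system the agent reads from (CRM, profile cache, search index)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.records: dict[str, dict[str, str]] = {}

    def update(self, subject_id: str, field: str, value: str) -> bool:
        """Correct or complete a field; skip stores that hold no data on the subject."""
        record = self.records.get(subject_id)
        if record is None:
            return False
        record[field] = value
        return True


def rectify(stores: list[Store], subject_id: str, field: str, value: str) -> list[str]:
    """Push one correction to every registered store and report where it landed."""
    updated = []
    for store in stores:
        if store.update(subject_id, field, value):
            updated.append(store.name)
    return updated


crm = Store("crm")
profile_cache = Store("agent_profile_cache")
analytics = Store("analytics")
crm.records["u-5"] = {"job_title": "Analyst"}
profile_cache.records["u-5"] = {"job_title": "Analyst"}

print(rectify([crm, profile_cache, analytics], "u-5", "job_title", "Head of Data"))
# ['crm', 'agent_profile_cache']
```

The returned list of updated stores can be written to the audit trail, which helps show that the correction was applied "without undue delay" everywhere it mattered.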

3. Right to Erasure (Art. 17)

Users can request deletion of their personal data under specific conditions, such as when it’s no longer needed for the purpose it was collected, or when the user withdraws consent. This is often referred to as the "right to be forgotten."

What to do:

- Implement deletion workflows that fully remove user data not just from active databases but also from AI agent logs, backups, and related processing environments.

- Ensure that if the user's data was used for training or refining the AI agent, it is excluded from future use and retraining datasets.

- Automate the identification of user-linked data to avoid partial deletions and compliance gaps.

- Provide users with an easy-to-use request process, and ensure deletions are confirmed back to the data subject in a timely manner.

For example, if a customer asks for their data to be deleted, the AI-powered recommendation engine should not continue to base future suggestions on their past behavior or profile.
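A deletion workflow covering several stores plus future training data could be sketched as below. The stores are in-memory stand-ins, and `DataPlatform` and its methods are hypothetical. Backups usually cannot be edited in place, so one common approach is to re-apply the same erasure list whenever a backup is restored; note that keeping the subject ID on a suppression list is a deliberate trade-off so the erasure can be honored later.

```python
from dataclasses import dataclass, field


@dataclass
class ErasureResult:
    subject_id: str
    erased_from: list[str]  # stores that held data on the subject
    excluded_from_training: bool


@dataclass
class DataPlatform:
    # Each named store maps subject IDs to records (stand-ins for DBs, logs, caches).
    stores: dict[str, dict[str, dict]]
    # Suppression list: subject IDs that must never enter a training dataset again.
    training_exclusions: set[str] = field(default_factory=set)

    def erase(self, subject_id: str) -> ErasureResult:
        """Remove the subject from every store and block future training use."""
        erased = []
        for name, records in self.stores.items():
            if records.pop(subject_id, None) is not None:
                erased.append(name)
        self.training_exclusions.add(subject_id)
        return ErasureResult(subject_id, erased, subject_id in self.training_exclusions)

    def training_rows(self, rows: list[dict]) -> list[dict]:
        """Filter a candidate training set against the suppression list."""
        return [r for r in rows if r["subject_id"] not in self.training_exclusions]


platform = DataPlatform(stores={
    "crm": {"u-9": {"email": "a@example.com"}},
    "chat_logs": {"u-9": {"last_chat": "..."}, "u-1": {"last_chat": "..."}},
    "recommendation_profiles": {"u-1": {"likes": ["boots"]}},
})
result = platform.erase("u-9")
print(result)
# ErasureResult(subject_id='u-9', erased_from=['crm', 'chat_logs'], excluded_from_training=True)
```

The `ErasureResult` gives you what you need to confirm the deletion back to the data subject.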

4. Right to Object (Art. 21)

Users can object to processing based on legitimate interests, and they can object at any time to processing for direct marketing, including profiling related to it. Once a user objects to direct marketing, that processing must stop. This right is especially relevant for AI agents that analyze user behavior or preferences.

What to do:

- Provide clear opt-out mechanisms during or before the AI interaction begins, especially for agents involved in marketing, profiling, or behavioral targeting.

- Respect tracking preferences, such as Do Not Track (DNT) signals sent by the browser and the choices users record in your Consent Management Platform (CMP).

- Modify the AI agent’s behavior upon objection, such as suppressing personalization features or anonymizing user input.

- Maintain logs to ensure that objections are consistently honored across all platforms and AI touchpoints.

For example, if a user opts out of behavioral profiling, your AI-powered product recommender should switch to generic suggestions rather than personalized ones.
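The fallback behavior described in this example could be sketched as a gate in front of the personalization model. The objection registry, the generic picks, and treating a `DNT: 1` header as an objection to profiling are assumptions for illustration; real web frameworks also expose headers case-insensitively, which a plain dict does not.

```python
# Hypothetical objection registry (e.g. fed by a preference center or CMP).
OBJECTIONS: dict[str, set[str]] = {"u-3": {"profiling"}}

GENERIC_PICKS = ["bestseller-1", "bestseller-2", "bestseller-3"]


def has_objected(user_id: str, processing: str, headers: dict[str, str]) -> bool:
    """Check stored objections and, as an assumed policy, the DNT request header."""
    if processing == "profiling" and headers.get("DNT") == "1":
        return True
    return processing in OBJECTIONS.get(user_id, set())


def recommend(user_id: str, history: list[str], headers: dict[str, str]) -> list[str]:
    if has_objected(user_id, "profiling", headers):
        return list(GENERIC_PICKS)  # no behavioral data is used on this path
    # Placeholder for the personalized model: suggest items related to past views.
    return [f"similar-to-{item}" for item in history[:3]] or list(GENERIC_PICKS)


recommend("u-3", ["boots"], {})              # generic: stored objection
recommend("u-4", ["boots"], {"DNT": "1"})    # generic: DNT header
print(recommend("u-4", ["boots"], {}))       # ['similar-to-boots']
```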

5. Right Not to Be Subject to Automated Decision-Making (Art. 22)

Users have the right not to be subject to decisions based solely on automated processing when those decisions have legal or similarly significant effects, such as being denied a loan, a job, or access to services. This right is particularly relevant to AI agents, since they are often deployed to automate decisions at scale with little or no human oversight. If these agents operate unchecked, they risk making opaque, biased, or unfair decisions that directly affect individuals' lives.

What to do:

- Clearly disclose whenever your AI agent is involved in automated decision-making, including how it influences the outcome.

- Offer a manual override or human-in-the-loop system that allows users to challenge or request a review of the decision.

- Make the appeal or review process easy to find and user-friendly, such as via an online form or support channel.

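- Don't deploy solely automated decision-making with legal or similarly significant effects unless an Art. 22(2) exception applies (the decision is necessary for a contract, authorized by law, or based on explicit consent) and suitable safeguards are in place.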

For example, if an AI agent automatically flags applicants for rejection during hiring, users must be informed and given the option to have their application reviewed by a human.
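One possible human-in-the-loop design for the hiring example: the model may advance candidates, but an adverse outcome is never final without a recruiter's review. The threshold, notice texts, and names below are hypothetical, and this sketch covers only the routing, not the review itself.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    ADVANCE = "advance"
    PENDING_HUMAN_REVIEW = "pending_human_review"


@dataclass
class Decision:
    applicant_id: str
    outcome: Outcome
    model_score: float
    notice: str  # disclosure shown to the applicant


REVIEW_QUEUE: list[Decision] = []


def screen_applicant(applicant_id: str, model_score: float,
                     threshold: float = 0.5) -> Decision:
    """Route low scores to a human instead of issuing an automated rejection."""
    if model_score >= threshold:
        return Decision(applicant_id, Outcome.ADVANCE, model_score,
                        "An automated system assessed your application.")
    decision = Decision(
        applicant_id, Outcome.PENDING_HUMAN_REVIEW, model_score,
        "An automated system flagged your application. A recruiter will review it "
        "before any decision is made, and you can request a human review at any time.",
    )
    REVIEW_QUEUE.append(decision)
    return decision


print(screen_applicant("a-1", 0.2).outcome)  # Outcome.PENDING_HUMAN_REVIEW
```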

Designing for this right protects users from opaque or unfair treatment and ensures your AI systems remain transparent and accountable.

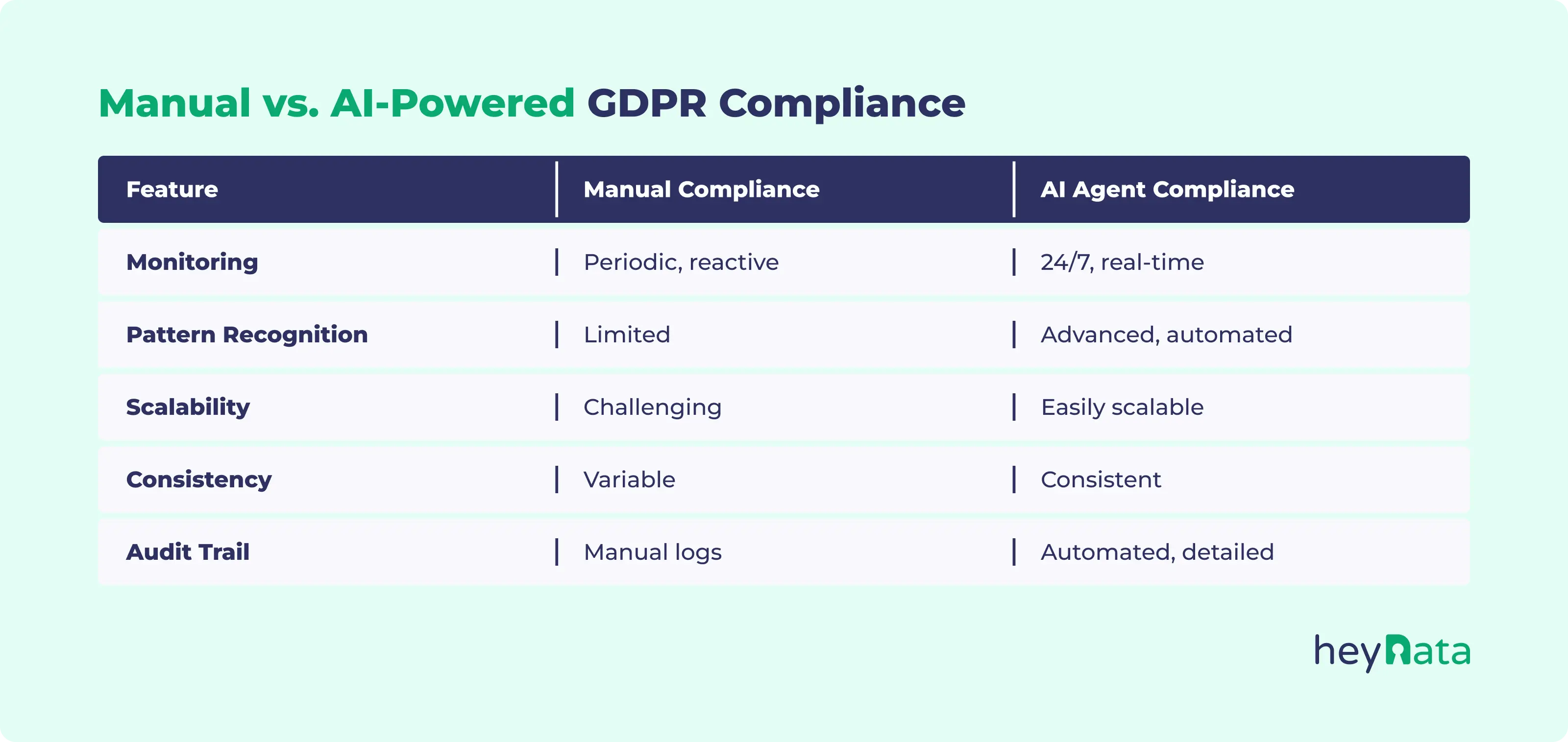

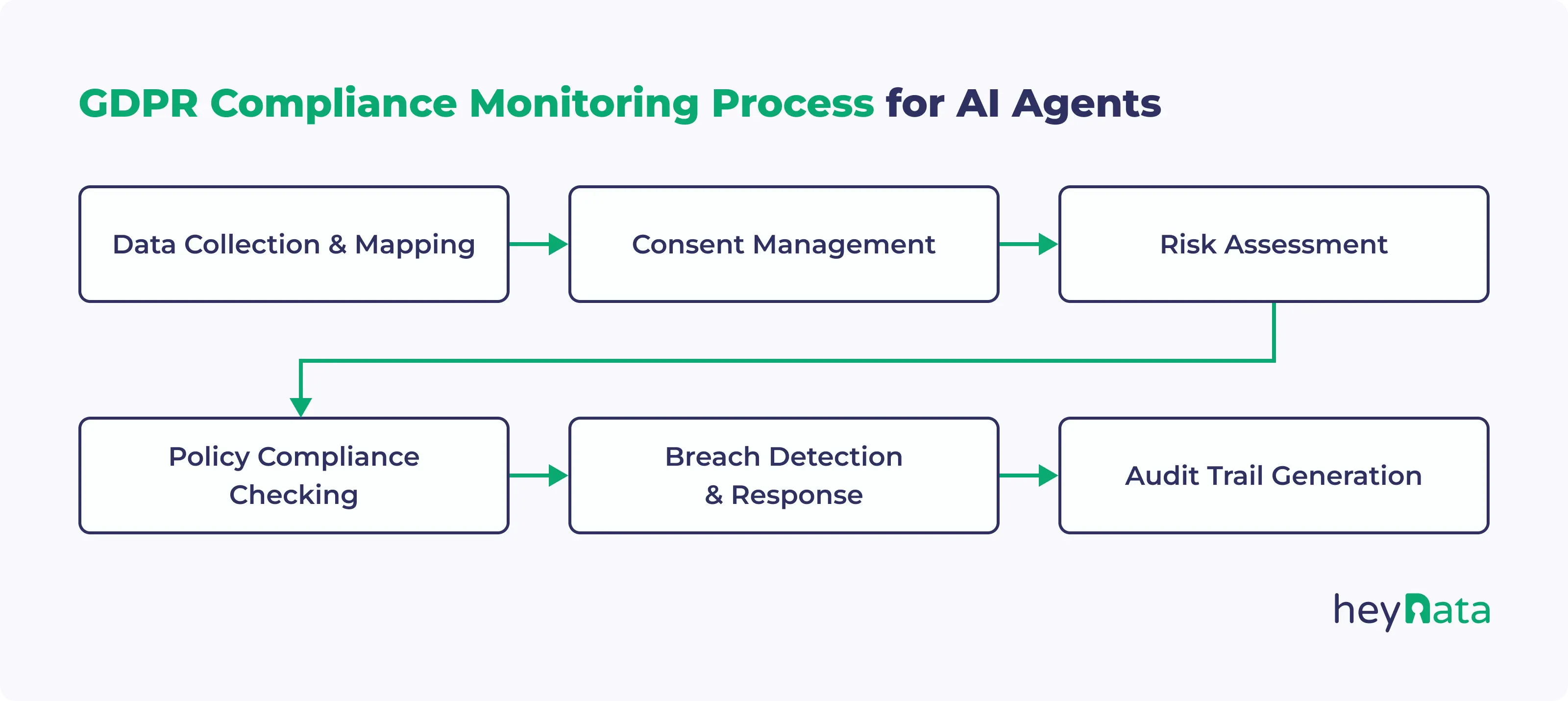

Ongoing Compliance & Monitoring

Compliance doesn’t end at deployment. Ongoing governance is essential to ensure your AI agent continues to meet regulatory and ethical standards as systems evolve.

- Vendor Risk & Processor Obligations: Start by reviewing your third-party tools. If your AI agent uses external APIs or services that process personal data, make sure a Data Processing Agreement (DPA) meeting the requirements of GDPR Art. 28 is in place and that the vendor adheres to GDPR. Choose privacy-forward vendors and audit them regularly to confirm they remain compliant. Find compliant vendors with heyData's Vendor Risk Management solution.

- Monitoring & Logging: Next, implement consistent monitoring and logging. Track what the AI agent does, what decisions it makes, what data it accesses, and how it behaves in unusual scenarios. These logs support both transparency and accountability, making it easier to investigate issues or respond to access requests.

- EU AI Act Readiness: You should also begin preparing for the EU AI Act. High-risk systems, like those in HR or finance, will face additional compliance burdens such as risk classification and conformity assessments. These obligations will complement, not replace, GDPR. Preparing now ensures a smoother transition.

- Regular Updates: Finally, treat compliance as a living process. Schedule annual reviews of your DPIAs, update training datasets responsibly, and communicate changes clearly to users and internal stakeholders. AI agents are not set-it-and-forget-it systems: they need governance that keeps pace with your business and the law.
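The monitoring point above can start with something as simple as comparing each agent's data-access volume against its usual baseline. This is a sketch of one assumed heuristic (a volume threshold at 3x baseline); real monitoring would add field-level and time-of-day signals. The event format and agent names are hypothetical.

```python
from collections import Counter


def flag_anomalies(access_events: list[dict], baseline_per_agent: dict[str, int],
                   factor: float = 3.0) -> list[str]:
    """Flag agents that accessed far more records than usual in the monitoring
    window, and agents with no registered baseline at all."""
    counts = Counter(event["agent"] for event in access_events)
    alerts = []
    for agent, count in sorted(counts.items()):
        baseline = baseline_per_agent.get(agent)
        if baseline is None:
            alerts.append(f"{agent}: no baseline (unregistered agent?)")
        elif count > factor * baseline:
            alerts.append(f"{agent}: {count} accesses vs. baseline {baseline}")
    return alerts


events = ([{"agent": "support_bot"}] * 40
          + [{"agent": "enrichment_agent"}] * 500
          + [{"agent": "shadow_script"}])
baselines = {"support_bot": 50, "enrichment_agent": 100}

for alert in flag_anomalies(events, baselines):
    print(alert)
# enrichment_agent: 500 accesses vs. baseline 100
# shadow_script: no baseline (unregistered agent?)
```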

Conclusion

Getting AI Agent GDPR compliance right isn’t easy, but it’s essential. From establishing a lawful basis and minimizing data to implementing transparency, DPIAs, and secure vendor management, your responsibilities as a business don’t end when the agent goes live.

The stakes are high, but so are the benefits. Compliant AI agents reduce legal risk, build trust, deliver better user experiences, and demonstrate your company’s commitment to responsible innovation.

If you're ready to take the next step in ensuring GDPR and AI Act readiness, explore heyData’s All-in-One Compliance Solution. From automated audits to pre-built DPIA templates and vendor monitoring, our solution helps you stay ahead of the curve.

Request a personalized demo today and see how we can support your AI governance journey.

Frequently Asked Questions

What is AI Agent GDPR Compliance?

AI Agent GDPR Compliance refers to ensuring that AI-driven systems that process personal data follow the principles and legal requirements of the General Data Protection Regulation (GDPR). This includes having a lawful basis for processing, respecting user rights, and ensuring transparency, accountability, and data security.

Do AI agents need a Data Protection Impact Assessment (DPIA)?

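Often, yes. Under GDPR Art. 35, a DPIA is mandatory whenever processing is likely to result in a high risk to individuals. This explicitly includes systematic and extensive automated evaluation or profiling that leads to decisions with legal or similarly significant effects, as well as large-scale processing of sensitive (special category) data. A DPIA helps identify and mitigate privacy risks before deployment.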

Can AI agents make automated decisions under GDPR?

Only under strict conditions. Article 22 of GDPR restricts fully automated decisions that significantly affect individuals. You must provide meaningful information about the logic involved and allow users to contest the decision or request human intervention.

How do I ensure my AI agent respects data subject rights?

Design the AI system to support key GDPR rights such as access, rectification, erasure, and objection. Provide user-friendly request mechanisms and ensure the AI system can respond or adapt when a user exercises their rights.

What data security measures should AI agents include?

Ensure data is encrypted in transit and at rest, apply strict access controls, and log agent activity. Segment sensitive data and monitor for anomalies to prevent unauthorized access or misuse.

Are third-party tools used in AI agents covered by GDPR?

Yes. If your AI agent uses APIs, plugins, or services that process personal data on your behalf, those vendors typically act as processors under GDPR. You need a Data Processing Agreement (DPA) with each of them and must ensure they comply with GDPR.

How does GDPR differ from the EU AI Act?

GDPR focuses on data protection and user rights, while the EU AI Act governs the ethical and risk-based deployment of AI systems. High-risk AI agents may fall under both regulations, requiring additional documentation and controls.

Important: The content of this article is for informational purposes only and does not constitute legal advice. The information provided here is no substitute for personalized legal advice from a data protection officer or an attorney. We do not guarantee that the information provided is up to date, complete, or accurate. Any actions taken on the basis of the information contained in this article are at your own risk. We recommend that you always consult a data protection officer or an attorney with any legal questions or problems.