Everything Your Startup Needs to Know About the AI Act!

Get our free whitepaper and learn how to easily and successfully comply with the EU AI Act requirements.

Navigating AI Compliance: A Guide for Startups

In today's rapidly evolving AI landscape, startups must understand and adhere to new regulatory frameworks like the EU's AI Act. Compliance is no longer optional: for the most serious violations, fines can reach €35 million or 7% of a company's worldwide annual turnover, whichever is higher (for startups and other SMEs, the lower of the two amounts applies). For any startup using, developing, or distributing AI solutions, a clear AI compliance strategy is critical. This blog post walks through practical compliance steps, from building an AI inventory to assessing risk and fulfilling the resulting obligations, and uses the example of a fintech startup, CrediScore-AI, to illustrate each one.

The AI Act applies to every company that develops, uses, or distributes AI systems in any of the 27 EU member states. Startups, especially those working with AI-driven products, must be aware of this regulation. Non-compliance comes with severe financial consequences, making it essential to implement compliance measures from the start.

See also: SMEs in the AI Era: The Impact of EU AI Act


1. Start with an AI Inventory

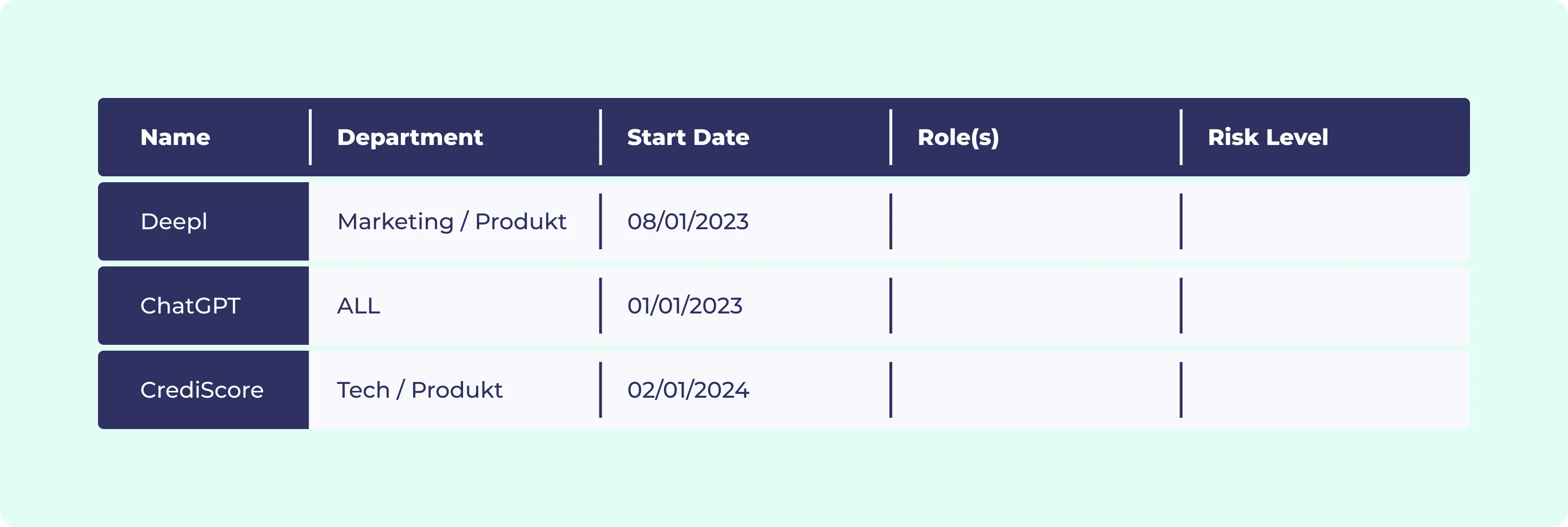

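The first step to compliance is identifying and cataloging all the AI systems your company uses, develops, or distributes. The AI Act defines an AI system as a machine-based system that operates with varying levels of autonomy, may adapt after deployment, and infers from the input it receives how to generate outputs such as predictions, content, recommendations, or decisions. Startups should create a detailed inventory that records each system together with the use cases and processes in which it is applied.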

2. Define Your Company’s Role Under the AI Act

The AI Act defines four main roles for organizations that interact with AI: provider, deployer, importer, and distributor. Your startup needs to determine its role for each AI system. For example, if you develop an AI system and offer it under your own name, you are its provider; if you use a third-party AI system in your business, you are most likely a deployer.

Case Study: “CrediScore-AI”

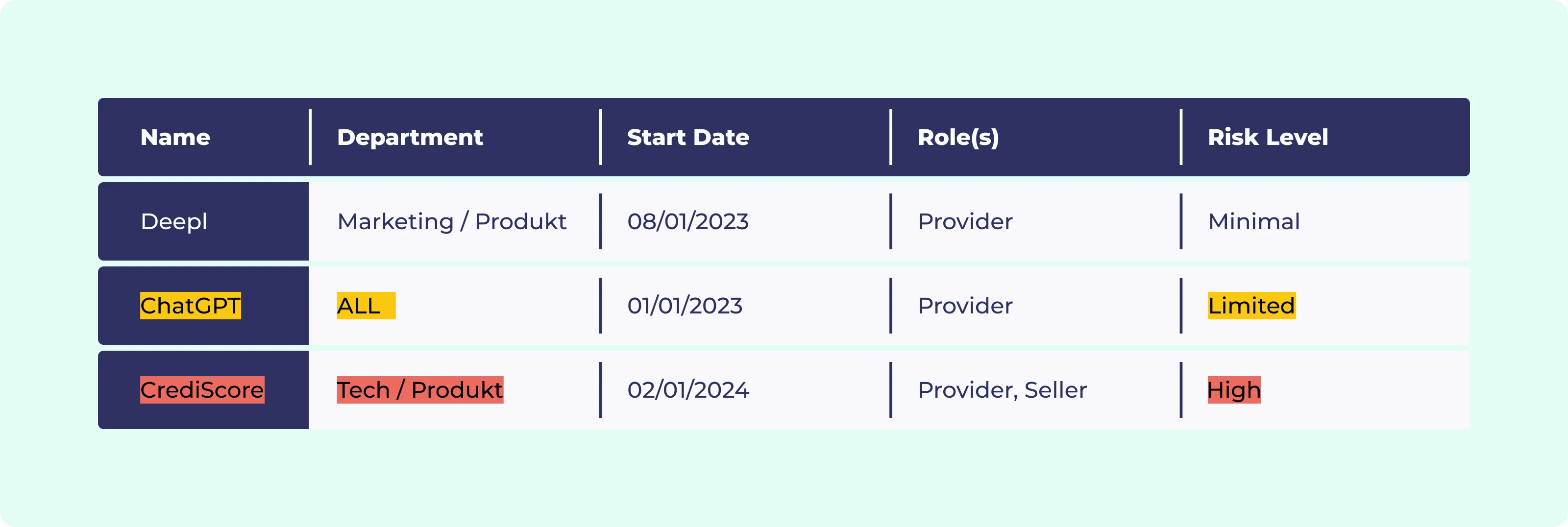

"CrediScore-AI," a fintech startup, started by listing its AI tools, such as ChatGPT, DeepL, and its proprietary credit scoring AI. The company documented each system, its use case, and the company's role with respect to it.

CrediScore-AI acts as both a provider and a deployer: it is the provider of its proprietary AI solution and a deployer of third-party systems such as ChatGPT and DeepL.

Figure 1: Start with an inventory of all AI systems at your company

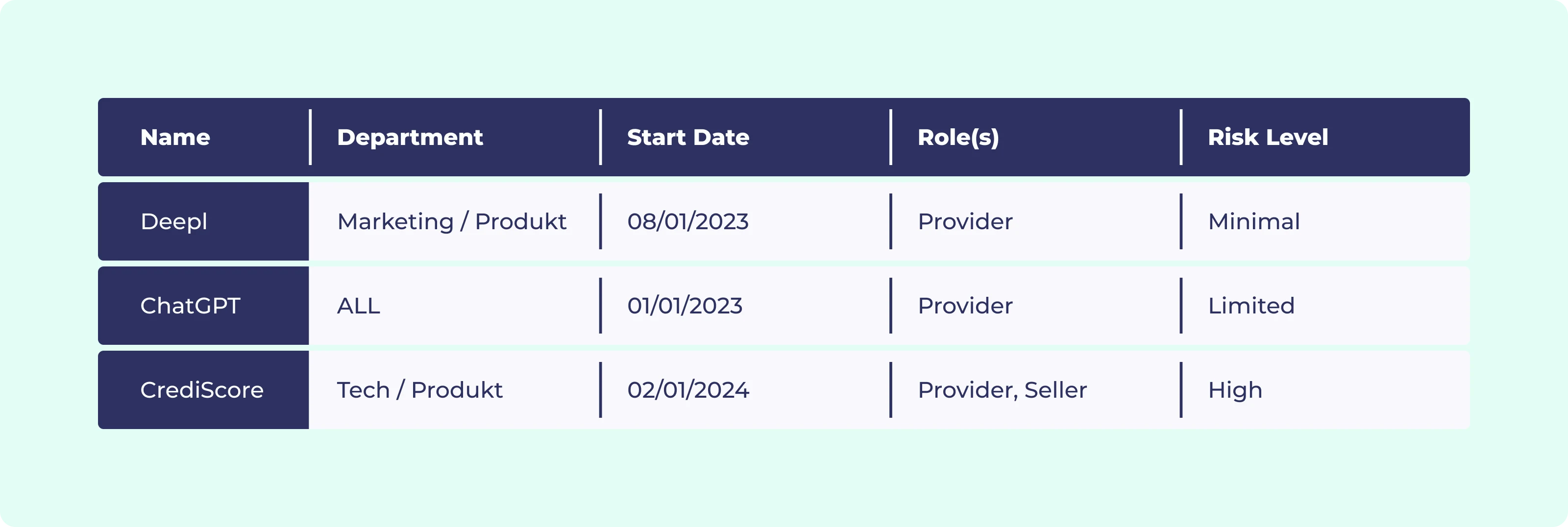

3. Assess AI System Risk Levels

AI systems are categorized into four risk levels: minimal, limited, high, and unacceptable. Your startup must assess the risk level of each AI system in its inventory, since this assessment determines its compliance obligations. Unacceptable-risk systems are banned outright, while high-risk systems, such as those used for credit scoring, carry the most stringent requirements.

Case Study: Risk Analysis at CrediScore-AI

AI that provides credit scores for consumers is listed as "high-risk" under Annex III of the AI Act. Because CrediScore-AI has no legal department, it used a third-party software tool to determine its risk classification.

ChatGPT, however, does not fall into this category. It does have generative features and is capable of creating "deepfakes": AI-generated or manipulated content that resembles real people, objects, places, or events and could falsely appear authentic. It was therefore classified as "limited-risk".

Finally, DeepL has no such capabilities; it only translates text from one language to another. It is therefore classified as "minimal-risk" under the AI Act.

Figure 2: Fill out the table accordingly by adding your relation to each system as well as their risk level

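For teams that prefer to keep this inventory in a machine-readable form, the table from Figures 1 and 2 can be sketched as a small Python data structure. This is a minimal, illustrative sketch only: the names `Role`, `RiskLevel`, `InventoryEntry`, and `systems_with` are hypothetical and not part of the AI Act or any compliance tool, and the entries simply restate CrediScore-AI's classifications from the case study.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    # The four roles discussed above (hypothetical enum for illustration).
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"


class RiskLevel(Enum):
    # The four risk levels of the AI Act.
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


@dataclass(frozen=True)
class InventoryEntry:
    system: str      # name of the AI system
    use_case: str    # what the company uses it for
    role: Role       # the company's role for this system
    risk: RiskLevel  # result of the risk assessment


# CrediScore-AI's inventory as described in the case study.
INVENTORY = [
    InventoryEntry("Credit scoring AI (proprietary)", "Consumer credit scoring",
                   Role.PROVIDER, RiskLevel.HIGH),
    InventoryEntry("ChatGPT", "General-purpose text tasks",
                   Role.DEPLOYER, RiskLevel.LIMITED),
    InventoryEntry("DeepL", "Translation", Role.DEPLOYER, RiskLevel.MINIMAL),
]


def systems_with(inventory, risk, role=None):
    """Return the names of systems at the given risk level, optionally filtered by role."""
    return [entry.system for entry in inventory
            if entry.risk is risk and (role is None or entry.role is role)]


if __name__ == "__main__":
    # Systems where CrediScore-AI is the provider of a high-risk system.
    print(systems_with(INVENTORY, RiskLevel.HIGH, Role.PROVIDER))
    # prints ['Credit scoring AI (proprietary)']
```

Keeping role and risk level as explicit fields makes it easy to pull out the systems that carry the heaviest obligations. A spreadsheet serves the same purpose, as long as every classification decision is documented.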

HINT: Documentation: Your Key to Passing Audits

The AI Act emphasizes documentation. Every decision, process, and compliance action needs to be thoroughly documented, especially for high-risk systems. If your startup undergoes an audit, only what is documented will count.

4. Ensure AI Literacy Across the Organization

Compliance is not just a technical issue—it affects every department. From marketing to product development, every team member must understand how the AI Act impacts their role. Regular training sessions and workshops are critical to ensuring that all employees, particularly those working with AI systems, are equipped to manage compliance-related tasks.

This obligation applies from 2 February 2025: Art. 4 of the AI Act requires providers and deployers to ensure that their staff have a sufficient level of AI literacy for their respective tasks. Non-compliance with this requirement can also be sanctioned under the penalty rules set by the member states.

CrediScore-AI’s AI Literacy Initiative

By reviewing its AI inventory, CrediScore-AI could identify which departments would need additional training. DeepL is used by marketing and product, two departments whose staff do not necessarily have in-depth knowledge of AI systems. However, DeepL only provides translations and requires little training to be used effectively.

ChatGPT, however, is used across the entire company. Unlike DeepL, it can perform a wide range of tasks and is used for many different purposes. Here the risk is greater: relying on factual information provided by ChatGPT could be dangerous if employees are not aware that language models can hallucinate. CrediScore-AI therefore decides to ensure that all staff receive training on the basic risks of generative AI.

Finally, its own product requires an even higher level of AI literacy, since the company is developing a credit scoring system. CrediScore-AI's tech team is already well aware of these risks, but its product team is not. The company has therefore decided to book additional training just for the product team.

Figure 3: For each department CrediScore decides which level of training is required.


See also: Webinar Recap: Preparing Your Business for the AI Act

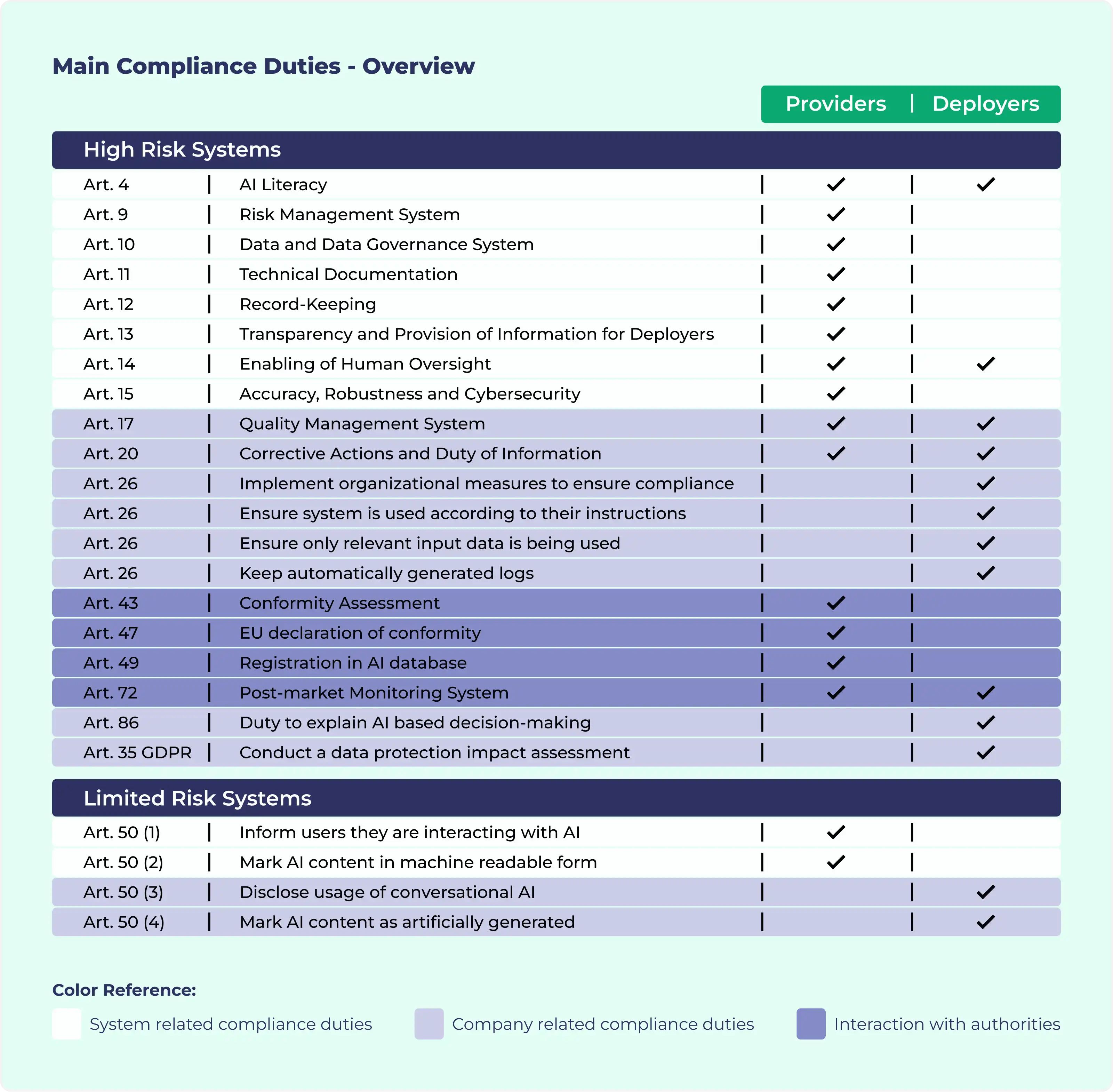

5. Fulfill Additional Obligations Based on Risk Levels

Depending on the risk level of your AI systems, further compliance duties may apply. For high-risk systems, these include a quality management system, a risk management system, record-keeping, and human oversight. Providers of high-risk AI, for instance, must ensure that the system is transparent enough for deployers to interpret its output and use it appropriately, and that it is robust and secure against cyberattacks.

Deployers of high-risk systems have duties of their own: they must keep the logs the system generates, assign human oversight, and use and monitor the system in line with its intended purpose.

Figure 4: Overview of all Compliance Duties for high- and limited-risk systems


Preparing for the AI Future

As the AI landscape continues to develop, startups must keep compliance in mind. Building a strong foundation of AI literacy, documenting every step, and staying informed about evolving regulations will be crucial for long-term success. Your startup can avoid hefty penalties and build user trust by taking the right steps now. With heyData, you can effortlessly meet the complex requirements of the AI Act—from documentation to employee training.

Important: The content of this article is for informational purposes only and does not constitute legal advice. The information provided here is no substitute for personalized legal advice from a data protection officer or an attorney. We do not guarantee that the information provided is up to date, complete, or accurate. Any actions taken on the basis of the information contained in this article are at your own risk. We recommend that you always consult a data protection officer or an attorney with any legal questions or problems.